Spatial Audio Guide

INTRODUCTION

This guide presents a practical overview of how spatial audio is produced, performed, and supported across different venues. It introduces a selection of spaces involved in the SANE project and describes the concrete workflows through which spatial audio is realised in each of them. Rather than focusing on formats or theoretical models, the guide shows how artists and technical teams actually work with spatial audio in practice.

Spatial audio is not a single technique or standardised system. It emerges from the interaction between artistic intentions, technical infrastructures, and the spatial and organisational conditions of a venue. Each space develops its own way of working with immersive sound, shaped by architecture, production culture, and the roles of artists and technical teams. This guide approaches spatial audio as a situated practice, embedded in specific working environments.

Some venues operate permanently installed spatial audio systems that provide a stable and repeatable framework for artistic work. Others rely on modular or temporary setups that are assembled specifically for individual productions. In these cases, spatial audio becomes a process of translation between artistic ideas, technical possibilities, and the physical space, often requiring close collaboration between artists and technicians.

Across all venues, spatial audio extends far beyond loudspeaker configurations. It includes decisions about signal routing, bass management, networked audio, control protocols such as OSC (Open Sound Control), and the software environments used for composition and performance. These technical choices directly affect how artists access the system, how they rehearse, and how spatial sound can be shaped in real time.

Spatial audio also influences the performative and organisational structure of events. In several venues, artists perform inside the loudspeaker setup itself, sharing the same acoustic space as the audience and challenging conventional stage-based concert formats. Monitoring strategies, rehearsal time, and system access are negotiated differently than in traditional live music contexts, reshaping both performance practice and audience experience.

The integration of spatial audio with visual media further reflects the diversity of approaches. Some venues prioritise sound as the primary medium and introduce visuals only when explicitly required by a project. Others support complex audiovisual constellations involving projection, lighting, and timecode-based synchronisation. In each case, these decisions are part of a broader production workflow rather than isolated technical features.

The following venue articles outline these workflows in detail. They describe how spatial audio is implemented, who controls it, and how artists work within each environment. Together, they form a spatial audio guide that offers insight into different models of practice and provides a practical reference for artists, technicians, curators, and producers working with immersive sound.

ECHO FACTORY with Venue HELLERAU – European Centre for the Arts Dresden

At HELLERAU, spatial audio is understood as a flexible, project-based practice rather than a fixed technical installation. Immersive sound setups are assembled specifically for each production and can be adapted in scale, geometry, and complexity depending on artistic requirements. This approach allows spatial audio to remain closely tied to the conceptual and performative needs of each work.

The venue works with a modular immersive loudspeaker system that supports multi-layered spatial arrangements, including elevated and overhead elements. Because the setup is not permanently installed, it can be reconfigured for different concert formats, stage situations, or audience layouts. Bass handling is similarly adaptable: low-frequency content can be spatialised within systems such as 4DSOUND or routed conventionally to the subwoofers through non-spatialised auxiliary sends, depending on the artistic intent.

Spatialisation responsibilities vary by project. In some cases, artists control spatial audio directly within their own software environments. In others, HELLERAU’s sound designers act as intermediaries, translating artistic concepts into the performance space and adapting them to the specific acoustic and technical conditions. This collaborative model supports both technically experienced spatial audio artists and those who prefer to focus on compositional or performative aspects.

HELLERAU does not rely on a permanently dedicated rendering computer, but dedicated systems can be provided when required. The current infrastructure includes high-performance computers integrated with Dante-based audio networking, and planned expansions include dedicated spatial audio hardware such as TiMax systems. Dante Audio-over-IP is central to the venue’s overall audio infrastructure, so artists planning to work at HELLERAU should take it into account early in their preparation.

Artists typically access the spatial audio system via standard digital audio workstations such as Ableton Live, Reaper, or Nuendo. Both discrete multichannel approaches and encoded or object-based workflows are possible, depending on the project. OSC is supported and can be used to control spatial parameters, though its role varies from project to project, ranging from local control on an artist’s laptop to more complex networked setups.

Live audio inputs are available and can be integrated either through the artist’s own interfaces or via HELLERAU’s Dante-based infrastructure. Monitoring follows the spatial philosophy of the space: artists usually perform within the loudspeaker setup itself, without separate stage monitoring. This shared spatial environment between performers and audience is a defining aspect of many productions at HELLERAU.

Audiovisual integration is possible and has been used in selected projects, combining multichannel sound with multi-projection video setups. Video playback and capture are supported through professional hardware, and audio-video synchronisation is typically achieved via timecode. Lighting control is coordinated with the venue’s lighting department and can be integrated using established protocols, depending on advance planning and project needs.
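
Timecode synchronisation comes down to agreeing on a shared position in time. As a minimal sketch, assuming non-drop-frame SMPTE/EBU timecode at 25 fps and a 48 kHz audio clock (neither rate is specified for HELLERAU), the functions below convert a timecode such as `00:01:00:12` into a frame count and into the matching audio sample index. The function names are illustrative only.

```python
def timecode_to_frames(timecode: str, fps: int = 25) -> int:
    """Convert non-drop-frame timecode 'HH:MM:SS:FF' into an absolute frame count."""
    # Assumption: non-drop-frame timecode; 29.97 fps drop-frame needs extra correction.
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    if not (0 <= minutes < 60 and 0 <= seconds < 60 and 0 <= frames < fps):
        raise ValueError(f"invalid timecode {timecode!r} at {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


def timecode_to_samples(timecode: str, fps: int = 25, sample_rate: int = 48_000) -> int:
    """Map a timecode position to the audio sample index it corresponds to."""
    # At 25 fps and 48 kHz one video frame lasts exactly 1920 samples;
    # integer division floors the result for rate pairs that do not divide evenly.
    return timecode_to_frames(timecode, fps) * sample_rate // fps


print(timecode_to_samples("00:01:00:12"))  # 1512 frames -> 2903040
```

Working in whole frames and integer sample counts avoids floating-point drift over long shows; drop-frame rates used in NTSC contexts would need their own conversion.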

Network connectivity is provided via the venue’s institutional infrastructure, offering high theoretical bandwidth, though artists are advised to plan conservatively due to potential access restrictions or maintenance. Tracking technologies are currently not part of the standard setup.

Overall, HELLERAU offers a highly adaptable environment for spatial audio, characterised by modular infrastructure, strong reliance on networked audio, and close collaboration between artists and technical staff. This makes the venue particularly suited for projects that require custom spatial configurations and flexible production workflows.

Effenaar (in collaboration with 4DSOUND)

For the SANE project at Effenaar, spatial audio was realised in close collaboration with 4DSOUND, bringing an object-based spatial audio approach into a concert venue context. Rather than adapting spatial audio to an existing club setup, the venue was temporarily transformed into an immersive listening environment, with spatialisation at the core of both artistic and technical decisions.

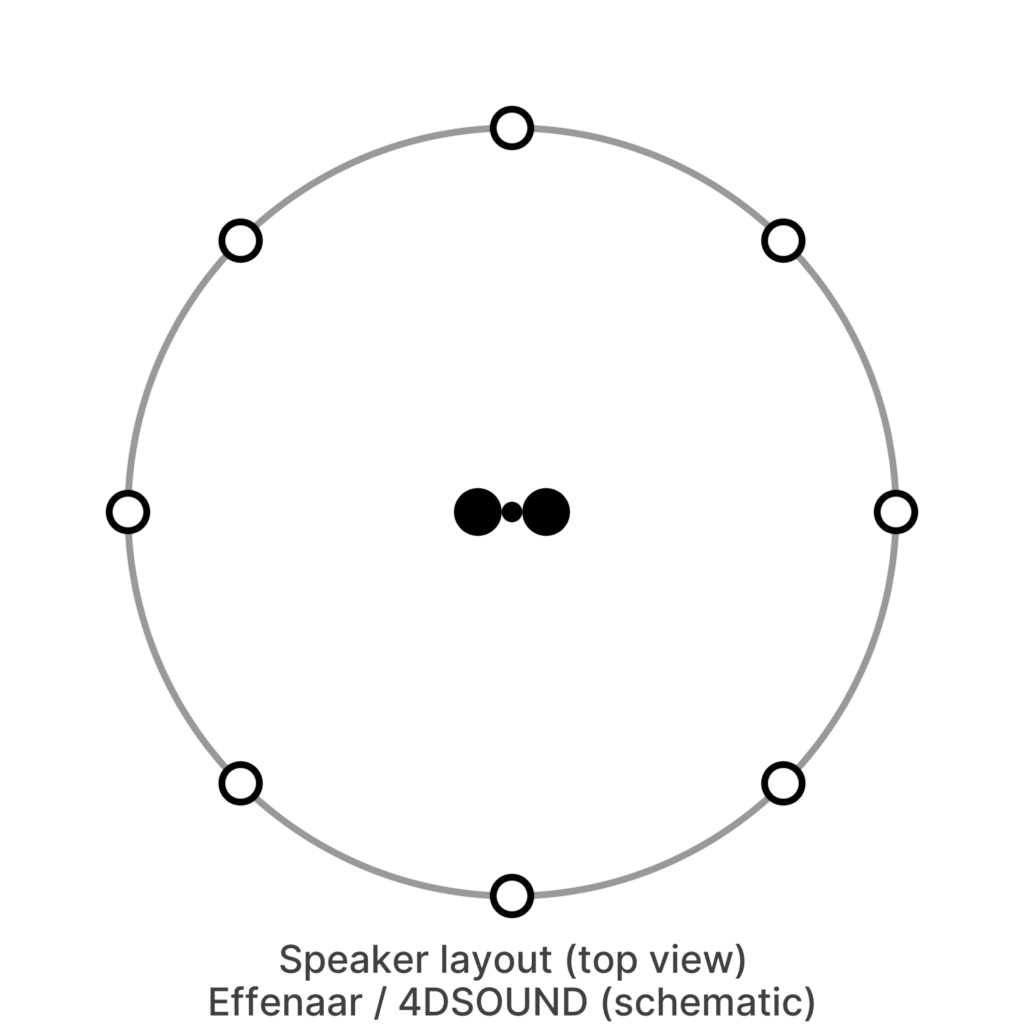

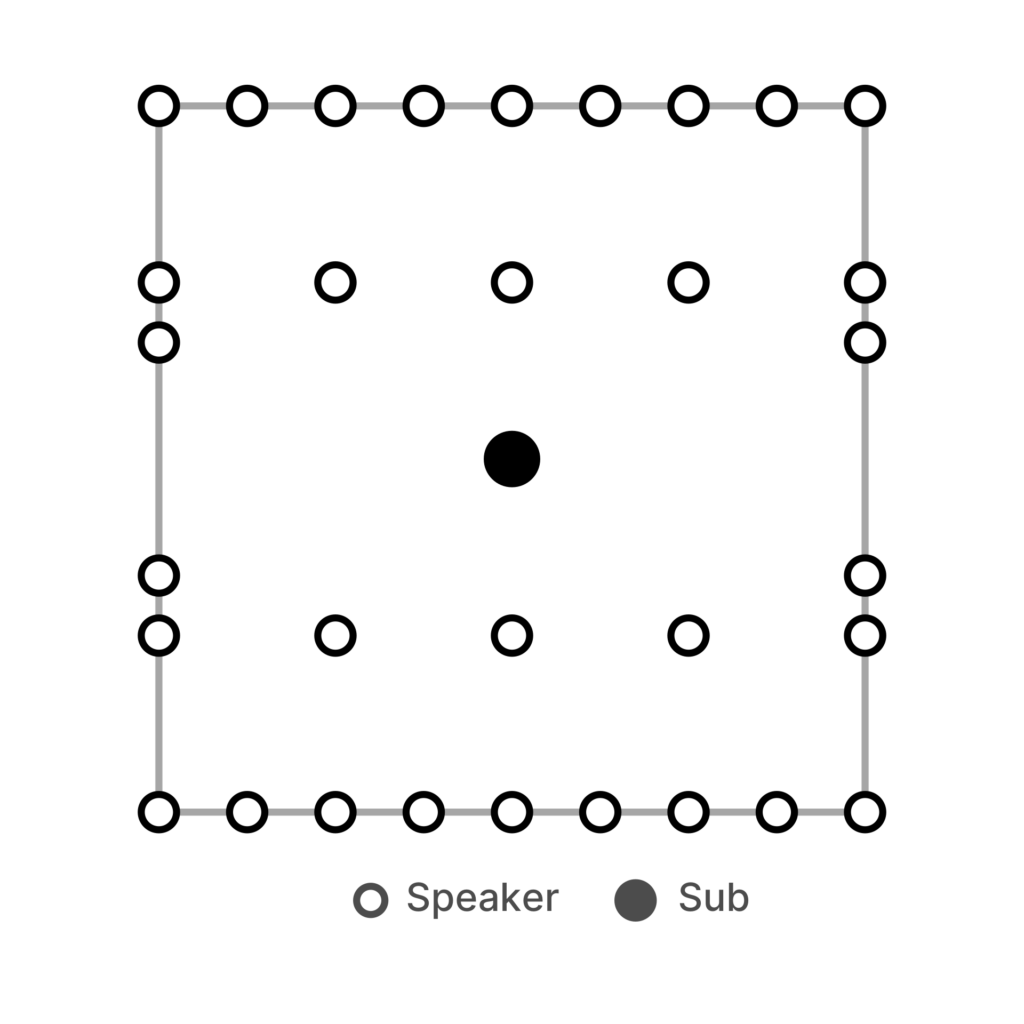

The spatial audio system was based on a circular loudspeaker arrangement surrounding the audience, complemented by additional elevated speakers. Low-frequency content was handled via a dedicated subwoofer feed, separated from the spatialised sound objects. Amplification and system control were designed to support precise timing and dynamic range, ensuring stable object-based rendering throughout performances.
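
Rendering a sound object to a circular array ultimately means computing one gain per loudspeaker. The 4DSOUND engine’s own rendering algorithm is not described here, so the sketch below uses a generic stand-in: pairwise constant-power amplitude panning on a horizontal ring of equally spaced loudspeakers. It ignores the elevated layer and assumes that speaker 0 sits at 0° and that azimuth increases from one speaker to the next.

```python
import math


def ring_pan_gains(azimuth_deg: float, num_speakers: int) -> list:
    """Constant-power pairwise panning for one source on a ring of speakers.

    Generic textbook panning, not the 4DSOUND rendering algorithm.
    Speaker i is assumed to sit at azimuth i * 360 / num_speakers degrees.
    """
    if num_speakers < 2:
        raise ValueError("need at least two loudspeakers")
    spacing = 360.0 / num_speakers
    position = (azimuth_deg % 360.0) / spacing  # fractional speaker index
    lower = int(position) % num_speakers        # speaker just below the source
    upper = (lower + 1) % num_speakers          # next speaker around the ring
    frac = position - int(position)             # 0 at lower speaker, 1 at upper
    gains = [0.0] * num_speakers
    # Sine/cosine law: lower**2 + upper**2 == 1, so perceived power stays constant.
    gains[lower] = math.cos(frac * math.pi / 2)
    gains[upper] = math.sin(frac * math.pi / 2)
    return gains


print([round(g, 3) for g in ring_pan_gains(22.5, 8)])
# [0.707, 0.707, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

Only the two speakers enclosing the source are active at any time, which keeps the image sharp but can make sources collapse onto individual loudspeakers; production renderers typically add spreading, distance cues, and a vertical dimension.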

Spatialisation was shared between technical direction and artistic control. While the overall system was supervised by 4DSOUND, artists were largely responsible for shaping spatial behaviour themselves, using familiar tools and workflows. This allowed spatial movement, scale, and perceptual effects to become integral compositional parameters rather than post-production elements.

Depending on artistic needs, performances were realised either with dedicated rendering machines or entirely within the artist’s own setup. When required, high-performance computers were provided to run the 4DSOUND engine and associated plugins. Artists accessed the spatial system through the 4DSOUND software environment, which automatically connected to the loudspeaker setup and exposed spatial parameters via OSC and a custom audio protocol.

The spatial audio workflow was fully object-based, with sound sources treated as independent entities that were rendered in real time to discrete loudspeakers. OSC played a central role in controlling spatial parameters such as position, movement, scale, and animation, enabling expressive and dynamic spatial compositions. Ableton Live and Max/MSP were commonly used as host environments, alongside spatialisation tools such as the 4DSOUND plugins, Panoramix, and Spat5.
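
To make OSC control of spatial parameters more concrete, the sketch below encodes a position update as an OSC 1.0 message (padded address string, type-tag string, then big-endian 32-bit floats) using only the Python standard library. The address `/source/<id>/position` and the helper names are hypothetical; the actual OSC namespaces of the 4DSOUND engine, Panoramix, or Spat5 are not documented here and will differ.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC-string: ASCII bytes plus a null terminator, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    arguments = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + arguments


def send_position(sock: socket.socket, host: str, port: int,
                  source_id: int, x: float, y: float, z: float) -> None:
    """Send a position update over UDP.

    Hypothetical address pattern; real renderers define their own namespaces.
    """
    message = osc_message(f"/source/{source_id}/position", x, y, z)
    sock.sendto(message, (host, port))
```

For example, `send_position(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), "127.0.0.1", 9000, 1, 0.0, 2.5, 1.0)` would move source 1 in whatever coordinate system the receiving renderer uses (host and port are placeholders). In practice, an existing OSC implementation such as Max’s `udpsend` object is usually used; the point here is only to show what travels over the network.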

Live audio inputs were supported and integrated into the spatial system with low latency. Instruments and microphones were routed through a mixing console and audio interfaces into the artists’ DAWs, processed by spatialisation plugins, and sent to the 4DSOUND engine for rendering. Overall system latency remained low enough to support live performance without perceptible delay.
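
Whether latency stays below perception depends on the sum of all buffering in the chain. The sketch below adds up per-stage buffer latencies for a hypothetical chain at 48 kHz. The stage names and buffer sizes are illustrative assumptions, not measurements from the Effenaar setup, and real devices add converter and safety-offset latency on top.

```python
SAMPLE_RATE = 48_000  # assumed session sample rate in Hz

# Illustrative numbers, not measured values: buffer size (in samples) per stage.
STAGES = {
    "interface input": 128,
    "DAW and spatialisation plugin": 128,
    "network transport to renderer": 48,
    "renderer output": 128,
}


def buffer_latency_ms(samples: int, sample_rate: int = SAMPLE_RATE) -> float:
    """Latency contributed by one buffer of `samples` frames, in milliseconds."""
    return 1000.0 * samples / sample_rate


def total_latency_ms(stages: dict, sample_rate: int = SAMPLE_RATE) -> float:
    """Sum the buffer latencies of all stages in the chain."""
    return sum(buffer_latency_ms(n, sample_rate) for n in stages.values())


for name, samples in STAGES.items():
    print(f"{name:<30} {buffer_latency_ms(samples):5.2f} ms")
print(f"{'total':<30} {total_latency_ms(STAGES):5.2f} ms")
```

With these assumed numbers the chain totals 9 ms. For scale, sound travels roughly 3 m in that time at about 343 m/s, so buffering of this order is comparable to the acoustic delay between neighbouring positions inside the loudspeaker array.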

Performers were typically positioned at the centre of the loudspeaker setup, sharing the same acoustic space as the audience. This eliminated the need for conventional stage monitoring and reinforced the immersive nature of the performances. Streaming and recording were not a primary focus of the setup for SANE, as the emphasis was placed on the in-room spatial experience.

Visual elements were used selectively, with some artists incorporating projections as part of their performances. Lighting followed standard venue operation and was not tightly integrated with the spatial audio system. Networked control via OSC was available, but audiovisual and lighting systems remained largely independent of the sound spatialisation workflow.

One of the main challenges in realising spatial audio at Effenaar was organisational rather than technical: ensuring sufficient setup and rehearsal time, and clearly communicating that the event differed fundamentally from a conventional pop concert. The collaboration with 4DSOUND enabled a robust and artist-driven spatial audio environment, demonstrating how immersive sound practices can be temporarily embedded into an existing music venue without compromising artistic depth.

Music Innovation Hub – La Capsula (INTORNO Labs)

La Capsula, operated by INTORNO Labs within the Music Innovation Hub, is conceived as a dedicated spatial audio venue where immersive sound is not an add-on but the primary architectural and artistic condition. The space is designed for three-dimensional listening and composition, enabling artists to work directly with spatial relationships as an integral part of their practice.

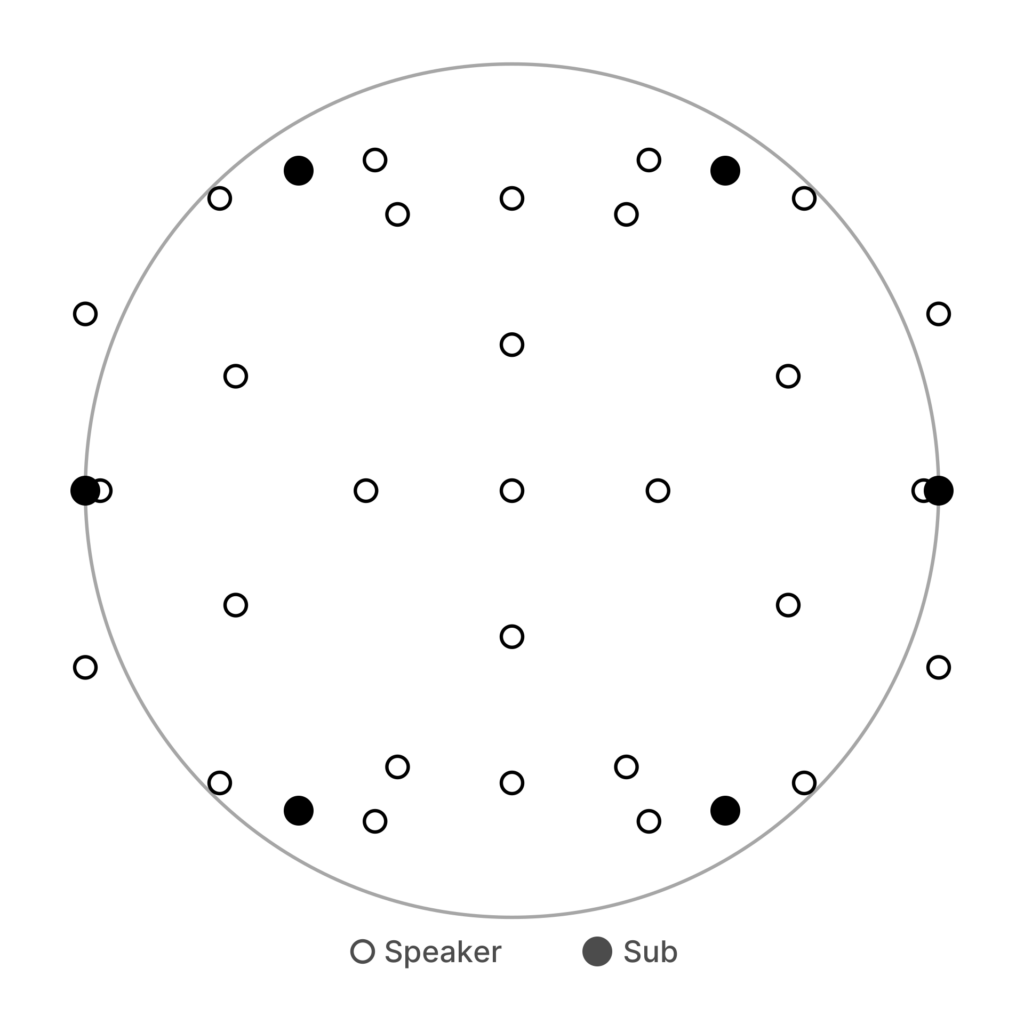

Spatial audio at La Capsula is realised through a dense, full-range multichannel system that surrounds the audience horizontally and vertically. Sound is treated as a collection of discrete elements that can be positioned, moved, and shaped throughout the space. Low-frequency content is handled via a dedicated mono subwoofer feed, separated from the spatialised channels to maintain clarity and control in the low end.
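
One common way to derive a mono subwoofer feed is to sum the signals and low-pass filter the result. The sketch below does this with a second-order Butterworth low-pass biquad using coefficients from the widely used RBJ Audio EQ Cookbook. The 80 Hz cutoff, the 48 kHz sample rate, and the unscaled sum are assumptions for illustration, not La Capsula’s actual crossover settings.

```python
import math


def lowpass_biquad(cutoff_hz: float, sample_rate: float, q: float = 1 / math.sqrt(2)):
    """Second-order low-pass coefficients (RBJ cookbook), normalised so a0 == 1."""
    w0 = 2 * math.pi * cutoff_hz / sample_rate
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = ((1 - cos_w0) / 2 / a0, (1 - cos_w0) / a0, (1 - cos_w0) / 2 / a0)
    a = (-2 * cos_w0 / a0, (1 - alpha) / a0)
    return b, a


def mono_sub_feed(channels, sample_rate: float = 48_000, cutoff_hz: float = 80.0) -> list:
    """Sum equal-length channels to mono and low-pass the sum for the subwoofer.

    Assumed values (80 Hz, 48 kHz, no gain scaling), not La Capsula's settings.
    """
    mono = [sum(frame) for frame in zip(*channels)]
    (b0, b1, b2), (a1, a2) = lowpass_biquad(cutoff_hz, sample_rate)
    x1 = x2 = y1 = y2 = 0.0  # filter state: previous inputs and outputs
    out = []
    for x0 in mono:
        # Direct form I difference equation.
        y0 = b0 * x0 + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y0)
        x1, x2 = x0, x1
        y1, y2 = y0, y1
    return out
```

Only the subwoofer path is shown. Full bass-management schemes usually apply a complementary high-pass to the main channels and often use steeper Linkwitz-Riley crossovers, which can be built by cascading two Butterworth sections like this one.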

The spatialisation workflow is centred around the INTORNO Labs DQS Engine, which serves as the core system for sound distribution and spatial control. Artists can access the system either via OSC or through discrete audio channels that are pre-mapped to specific buses. This dual approach allows both technically advanced spatial workflows and more straightforward multichannel routing, depending on the artist’s experience and needs.

Responsibility for spatialisation typically lies with the artists themselves, supported by resident technicians when required. This setup encourages hands-on exploration and makes spatial sound a performative and compositional tool rather than a purely technical process. The system supports a range of working styles, from fully pre-composed spatial structures to live, performative spatial manipulation.

A dedicated rendering computer is available on site, running the INTORNO DQS Engine. Artists can also integrate their own computers into the system. Standard digital audio workstations such as Ableton Live, Logic, and Reaper are commonly used. Rather than relying on third-party spatialisation plugins, the setup focuses on the native tools provided by the DQS Engine, with additional bridge plugins available when required.

Live audio inputs are available and can be integrated through a stagebox providing multiple input channels. These inputs can be spatialised in real time, enabling performances with live instruments, voices, or external sound sources. Monitoring follows the spatial philosophy of the venue: there is no traditional stage and no separate monitor system. Artists and audience share the same acoustic space, and performers typically rely on in-ear monitoring if needed.

La Capsula places less emphasis on audiovisual integration by default, keeping the focus on sound. Visual systems, screens, or cameras are only introduced when specifically requested by artists or productions. Lighting control is minimal and non-programmatic, limited to basic adjustments such as dimming and colour, without complex DMX-based show control.

When required, La Capsula can support video playback, capture, and streaming using professional broadcast hardware. Audio–video synchronisation is achieved via timecode, ensuring reliable alignment for more complex productions. Network connectivity is provided via fibre-based infrastructure, supporting high data throughput, although spatial audio workflows are largely designed to operate independently of external network dependencies.

Overall, La Capsula represents a purpose-built environment for spatial audio experimentation and performance. Its emphasis on discrete-channel spatialisation, artist-driven control, and shared performer–audience space makes it particularly well suited for immersive concerts, research-oriented productions, and exploratory spatial sound practices within the SANE project.

ZiMMT – Zentrum für immersive Medienkunst und Technologie

ZiMMT works with spatial audio as a stable, permanently installed infrastructure that is designed to support a wide range of artistic approaches. Unlike project-based or temporary setups, spatial audio at ZiMMT is an integral part of the venue’s everyday operation, offering artists a consistent and technically reliable environment for immersive sound production and presentation.

The venue operates a hemispherical multichannel loudspeaker system that enables precise spatial placement across horizontal and vertical dimensions. Spatial audio is typically realised using discrete audio channels, allowing artists to address individual loudspeakers or groups directly. At the same time, ZiMMT remains flexible: object-based workflows or Ambisonics decoding can be integrated when required, without forcing artists to adapt their own production environments.
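
Ambisonics decoding maps a small set of spatial components onto however many loudspeakers a room has. ZiMMT’s hemispherical decoder is not documented here, so the sketch below reduces the idea to its simplest case for a single source, working with gains rather than audio buffers: horizontal first-order encoding (W, X, Y) and a basic decoder for a regular ring of at least three loudspeakers. It assumes a simple convention in which W carries the signal at unit gain.

```python
import math


def encode_first_order_2d(azimuth_rad: float) -> tuple:
    """Horizontal first-order encoding gains (W, X, Y) for a source at an azimuth.

    Simplified 2D convention assumed here: W = 1, X = cos(az), Y = sin(az).
    """
    return 1.0, math.cos(azimuth_rad), math.sin(azimuth_rad)


def decode_basic_ring(w: float, x: float, y: float, num_speakers: int) -> list:
    """Basic (mode-matching) first-order decoder for a regular horizontal ring.

    Simplified 2D sketch; not ZiMMT's hemispherical decoder.
    Speaker i sits at azimuth 2*pi*i/num_speakers. First order needs >= 3 speakers.
    """
    if num_speakers < 3:
        raise ValueError("a first-order 2D decoder needs at least three loudspeakers")
    gains = []
    for i in range(num_speakers):
        phi = 2 * math.pi * i / num_speakers
        # Project onto the speaker direction; the factor 2 weights the first-order terms.
        gains.append((w + 2 * (x * math.cos(phi) + y * math.sin(phi))) / num_speakers)
    return gains


print(decode_basic_ring(*encode_first_order_2d(0.0), 8)[0])
```

For a source at 0° on an eight-speaker ring, the front speaker receives 3/8 and the rear speaker receives -1/8. Negative (out-of-phase) gains of this kind are characteristic of basic decoders, which is why practical decoders often apply max-rE or in-phase weighting and, for hemispherical layouts, use methods such as AllRAD.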

Low-frequency content is handled separately through dedicated LFE channels. These can be addressed either directly by the artist or managed in collaboration with ZiMMT technicians, depending on the project. This separation ensures clarity in the spatial image while maintaining control over low-end energy in the room.

A key aspect of ZiMMT’s approach is that no fixed rendering computer is required. Artists can work entirely on their own machines, or alternatively use a computer provided by the venue. Audio distribution and system access are based on a Dante Audio-over-IP infrastructure, which allows multichannel audio to be routed flexibly and reliably between computers, interfaces, and the loudspeaker system. Artists typically connect either via Dante Virtual Soundcard on their own machines or through dedicated Dante interfaces provided on site.
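
Routing many channels over Dante is, at its core, a bandwidth question. The sketch below estimates the raw uncompressed PCM payload for a given channel count, assuming 48 kHz and 24-bit samples. It deliberately ignores packet headers and any Dante-specific transport overhead, so actual link usage is higher than these figures.

```python
def pcm_payload_mbps(channels: int, sample_rate: int = 48_000, bit_depth: int = 24) -> float:
    """Raw PCM payload in Mbit/s: channels x samples per second x bits per sample.

    Payload only; packet headers and transport overhead are not included.
    """
    return channels * sample_rate * bit_depth / 1_000_000


for count in (16, 32, 64, 128):
    print(f"{count:4d} channels: {pcm_payload_mbps(count):8.3f} Mbit/s payload")
```

At 64 channels this gives about 73.7 Mbit/s of payload before overhead, which is one reason larger multichannel setups generally rely on Gigabit network links.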

Control data and spatial metadata can be transmitted via OSC across the venue’s network. OSC is used flexibly, depending on the spatialisation approach chosen by the artist. ZiMMT provides a dedicated network for audio and control data and usually concentrates spatial processing on one or a small number of machines to ensure system stability.

Artists commonly work with digital audio workstations such as Reaper or Ableton Live. For spatial control, ZiMMT supports a variety of tools rather than enforcing a single solution. Plugins and systems such as Grapes, custom OSC patches, or hardware controllers are frequently used, and artists are free to integrate their own custom workflows.

Live audio input is available but not mandatory. When needed, audio can be captured through analogue or AES-based stageboxes in combination with a digital mixing console, or directly via multiple audio interfaces on the artist’s computer. In all cases, live inputs are routed into the Dante network and become available as multichannel signals within the spatial audio system.

Monitoring follows the immersive logic of the space. Rather than relying on conventional stage monitoring, artists typically work within the same loudspeaker environment as the audience. For documentation and dissemination, ZiMMT offers binaural recording using a dummy head, as well as alternative microphone setups that can be integrated into the spatial workflow.

Overall, ZiMMT provides a robust and open spatial audio environment that balances technical reliability with artistic freedom. Its permanently installed system, networked audio infrastructure, and flexible approach to spatial control make it well suited for both experimental projects and repeatable production workflows within the SANE project.